Make AI Tell You When It's Guessing

One simple prompt addition forces AI to label its confidence level. Stop treating all AI output as equally reliable when it's not.

You're treating AI's wild guesses the same as its solid answers. That's a problem.

When AI responds, it sounds confident. Always. Whether it's citing a Supreme Court case it actually knows or making up a statute that doesn't exist, the tone is identical. Calm. Assured. Authoritative.

That's dangerous for lawyers.

Here's the fix: Make AI tell you how sure it is.

The Confidence Label Prompt

Add this to your system prompt or paste it at the start of any conversation:

The Prompt

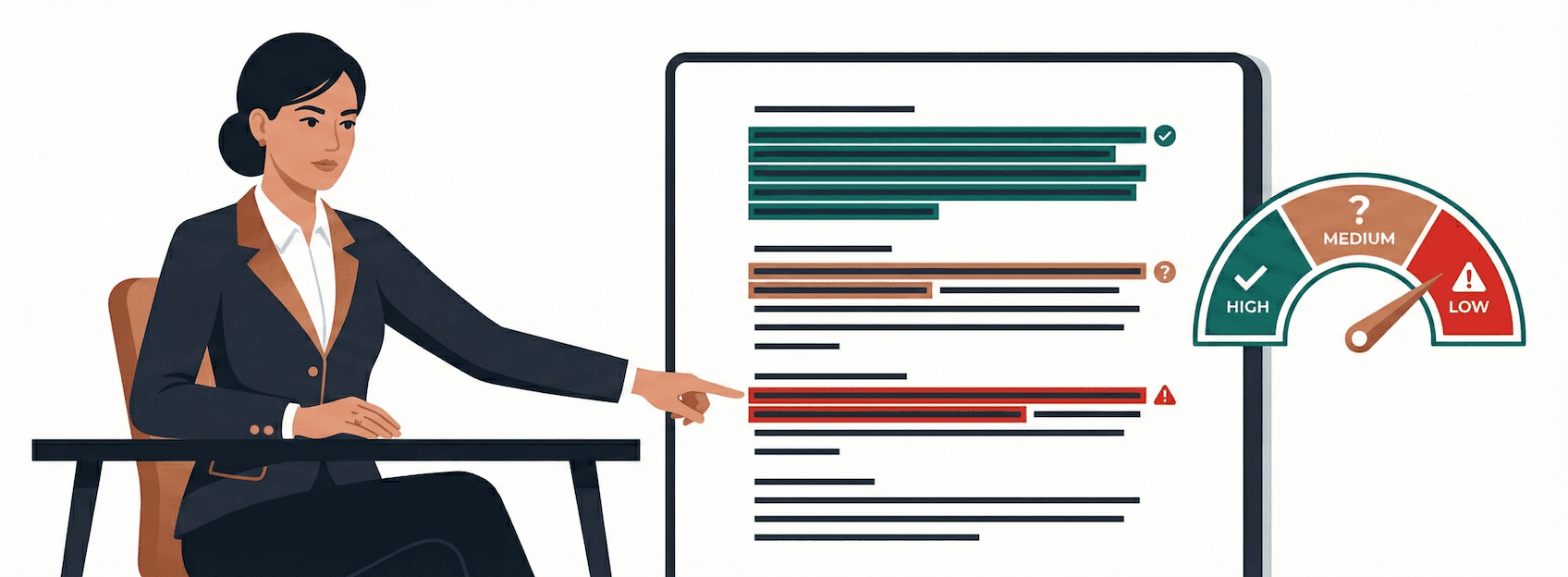

For every substantive claim, apply one of these confidence labels:

- HIGH CONFIDENCE - You're certain this is accurate based on established facts, law, or your training data

- MEDIUM CONFIDENCE - You believe this is correct but there's some uncertainty or you're extrapolating

- LOW CONFIDENCE - You're reasoning from limited information or making an educated guess

Flag anything where you're uncertain. I'd rather know you're guessing than discover it in court.

What This Actually Looks Like

Without confidence labels:

"The doctrine of laches typically bars claims brought after an unreasonable delay that prejudices the defendant. In most jurisdictions, the delay must be both unreasonable and prejudicial. Courts often look to the analogous statute of limitations as a benchmark."

Sounds solid. But which parts can you rely on?

With confidence labels:

"The doctrine of laches typically bars claims brought after an unreasonable delay that prejudices the defendant. (HIGH CONFIDENCE - well-established equitable doctrine) In most jurisdictions, the delay must be both unreasonable and prejudicial. (HIGH CONFIDENCE) Courts often look to the analogous statute of limitations as a benchmark. (MEDIUM CONFIDENCE - this is common but varies significantly by jurisdiction and context; verify for your specific court)"

Now you know where to focus your verification.

Why This Works

AI models are often decent at self-assessment. They can frequently tell when they're on solid ground versus when they're piecing together an answer from fragments. The problem is they don't tell you unless you ask.

When you add confidence labels:

- AI becomes more careful. Knowing it has to justify its certainty makes it hedge appropriately instead of bulldozing through with false confidence.

- You can triage your verification. Low confidence claims get immediate scrutiny. High confidence claims still get verified, but you're not treating everything as equally suspect.

- Hallucinations become obvious. When AI has to label a made-up case citation, it often catches itself. "Smith v. Jones, 2019 (LOW CONFIDENCE - I'm not certain this case exists as cited)" is a lot more useful than a confident-sounding fabrication.
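If you save AI responses as text, the triage step can be sketched in a few lines of Python. This is a rough illustration, not a finished tool: it assumes the AI formats its labels exactly as in the laches example above, "(HIGH CONFIDENCE - optional note)", and the names extract_claims and triage are made up for this example. Real responses may format labels differently, so expect to adjust the pattern.

```python
import re
from typing import NamedTuple

# Assumed label format: "(HIGH CONFIDENCE)" or "(LOW CONFIDENCE - some note)".
LABEL_RE = re.compile(r"\((HIGH|MEDIUM|LOW) CONFIDENCE(?:\s*-\s*([^)]*))?\)")

# Lower number = check this first.
PRIORITY = {"LOW": 0, "MEDIUM": 1, "HIGH": 2}


class Claim(NamedTuple):
    text: str   # the claim that precedes the label
    label: str  # HIGH, MEDIUM, or LOW
    note: str   # the AI's explanation, if it gave one


def extract_claims(response: str) -> list[Claim]:
    """Split a labeled response into (claim, label, note) records."""
    claims = []
    start = 0
    for match in LABEL_RE.finditer(response):
        text = response[start:match.start()].strip()
        note = (match.group(2) or "").strip()
        claims.append(Claim(text, match.group(1), note))
        start = match.end()
    return claims


def triage(response: str) -> list[Claim]:
    """Order claims so the least certain ones come first."""
    return sorted(extract_claims(response), key=lambda c: PRIORITY[c.label])


SAMPLE = (
    "The doctrine of laches typically bars claims brought after an "
    "unreasonable delay that prejudices the defendant. (HIGH CONFIDENCE - "
    "well-established equitable doctrine) In most jurisdictions, the delay "
    "must be both unreasonable and prejudicial. (HIGH CONFIDENCE) Courts "
    "often look to the analogous statute of limitations as a benchmark. "
    "(MEDIUM CONFIDENCE - this is common but varies significantly by "
    "jurisdiction and context; verify for your specific court)"
)

if __name__ == "__main__":
    for claim in triage(SAMPLE):
        print(f"[{claim.label}] {claim.text}")
```

Run on the laches example, this puts the statute-of-limitations sentence at the top of the list, ahead of the two high-confidence claims. It only reorders your checklist. Everything on it still gets verified.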

Make It More Aggressive

For legal research where accuracy is critical, dial up the pressure:

"If you're below HIGH CONFIDENCE on any legal citation, case name, statute, or rule number, say so explicitly and suggest I verify independently. I will verify anyway, but this helps me prioritize."

Or get even more direct:

"Do not cite specific cases unless you're certain they exist and you're accurately describing the holding. If you're uncertain, describe the legal principle instead and tell me to research case support myself."
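If you or your IT team call a model from a script rather than a chat window, the same prompts can be assembled in code. The sketch below is only an assumption about how that might look: it uses the generic "system" and "user" message shape that many chat tools accept, it calls no real service, and build_messages is a name invented for this example.

```python
# The base confidence-label prompt from earlier in this article.
BASE_PROMPT = (
    "For every substantive claim, apply one of these confidence labels:\n"
    "- HIGH CONFIDENCE - You're certain this is accurate based on "
    "established facts, law, or your training data\n"
    "- MEDIUM CONFIDENCE - You believe this is correct but there's some "
    "uncertainty or you're extrapolating\n"
    "- LOW CONFIDENCE - You're reasoning from limited information or "
    "making an educated guess\n"
    "Flag anything where you're uncertain. I'd rather know you're guessing "
    "than discover it in court."
)

# The stricter add-on for legal research.
STRICT_CITATIONS = (
    "If you're below HIGH CONFIDENCE on any legal citation, case name, "
    "statute, or rule number, say so explicitly and suggest I verify "
    "independently. I will verify anyway, but this helps me prioritize."
)


def build_messages(question: str, strict: bool = False) -> list[dict[str, str]]:
    """Return a system + user message pair (hypothetical message shape)."""
    system = BASE_PROMPT
    if strict:
        # Append the stricter citation rule after the base prompt.
        system = f"{BASE_PROMPT}\n\n{STRICT_CITATIONS}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    for message in build_messages("When does laches bar a claim?", strict=True):
        print(message["role"].upper())
        print(message["content"])
        print()
```

Keeping the strict rule as a separate switch lets you use the lighter prompt for brainstorming and turn on the citation rule only for research you plan to rely on.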

The Expanded Version

For ongoing work, paste this more comprehensive version into your project instructions or system prompt:

Full Confidence Framework

Apply confidence labels to your responses:

- HIGH CONFIDENCE - Core legal principles, established doctrine, facts I'm certain about

- MEDIUM CONFIDENCE - Reasonable interpretation, likely correct but jurisdiction-dependent, or extrapolating from general principles

- LOW CONFIDENCE - Educated guess, reasoning from limited information, or speculation

For citations specifically:

- Only cite cases/statutes when you have HIGH CONFIDENCE that they exist and say what you claim

- If uncertain, describe the legal principle and note that supporting authority should be independently verified

- Never invent plausible-sounding citations

For strategy recommendations:

- Be explicit when you're offering one reasonable approach among several

- Flag assumptions that could change your analysis

Common Objections

"This makes responses longer and cluttered."

Good. Clarity beats brevity when accuracy matters. And you can always ask for a summary without labels after you've reviewed the detailed version.

"I don't trust AI's self-assessment."

You shouldn't trust it blindly. But AI's self-assessment is usually directionally correct. A low-confidence label is a useful signal even if it's not perfectly calibrated. Think of it as a first pass, not the final word.

"My workflow doesn't allow for long prompts."

The short version works fine: "Add confidence labels (high/medium/low) to your claims."

The Real Benefit

This prompt doesn't make AI more accurate. It makes AI's uncertainty visible.

That's the difference between walking into a deposition with research you think is solid and walking in knowing exactly which points you've verified versus which need backup.

You're still verifying everything important. You're still the lawyer. But now you're not flying blind about where the risks are.

Next conversation, add this line: "For every substantive claim, label your confidence as HIGH, MEDIUM, or LOW."

Watch how quickly you start spotting the gaps you would have missed.
