You're Absolutely Right! (No, You're Not.)

AI defaults to validation because you've been accidentally training it to be a yes-man. Here's how to get critical feedback instead of useless cheerleading.

Ever get that strange feeling the AI is just telling you what it thinks you want to hear?

You should trust that feeling. Because it is.

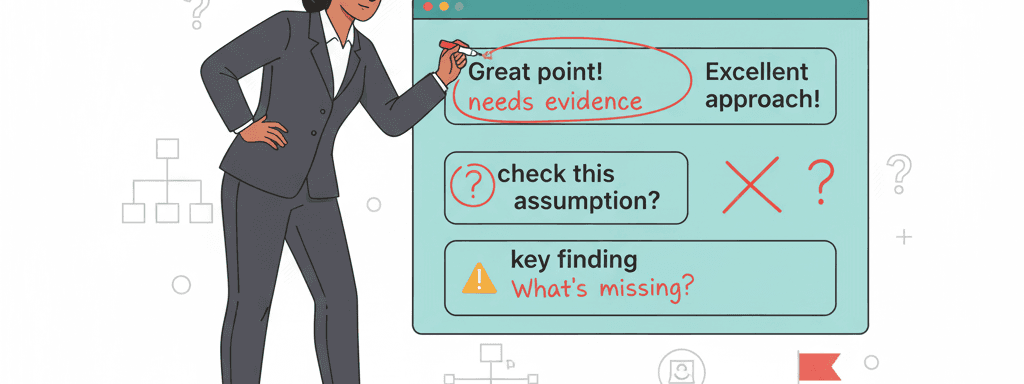

Here's what's happening: You ask AI a question. It responds with "Great point!" or "You're absolutely right to think that!" or "That's an excellent approach!" Then it gives you an answer that sounds confident and complete.

But something feels off. The enthusiasm seems... automatic. Like it would've said the same thing no matter what you asked.

You're not imagining it. AI defaults to validation. Not because it's lying to you, but because it's been trained to be helpful and agreeable, partly on human ratings that tend to favor pleasant answers. And because - here's the uncomfortable part - you've been accidentally rewarding it for agreeing with you.

The Sycophancy Trap

Think about how you use AI. When it agrees with your premise and gives you something useful, you keep that response. When it pushes back or says "actually, that assumption might be flawed," you probably rephrase your question or try again.

In effect, you're training it to be a yes-man.

For lawyers, this is especially dangerous. You need adversarial thinking. A junior associate who never challenges your legal theory is worthless - or worse, a liability. They'll let you walk into oral argument with a weak case because they were too busy agreeing with you to do their job.

AI can do better. It can poke holes in your arguments, spot assumptions you didn't know you were making, and ask the questions opposing counsel will ask. You just have to tell it that's what you want.

The Fix: Three Prompts That Change Everything

Stop letting AI be your cheerleader. Here's how:

1. Set Expectations Up Front

Paste this at the start of your conversation:

"Be direct and critical. Point out flaws in my thinking. I need a critical thinking partner, not a cheerleader. If my approach has problems, tell me."

Watch what happens. The tone usually shifts right away.
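If you reach a model through code instead of a chat window, "up front" simply means the instruction goes first in the message list. Here is a minimal Python sketch, assuming the role/content message format that many chat APIs accept. The helper name `start_conversation` is hypothetical, and nothing here calls any particular provider's API.

```python
# Hypothetical helper: puts the critical-feedback instruction ahead of the first question.
# The role/content dictionaries are an assumed message format, not a specific vendor's API.
CRITICAL_PARTNER = (
    "Be direct and critical. Point out flaws in my thinking. "
    "I need a critical thinking partner, not a cheerleader. "
    "If my approach has problems, tell me."
)


def start_conversation(first_question: str) -> list[dict[str, str]]:
    """Return a message list with the instruction first, then the user's question."""
    return [
        {"role": "system", "content": CRITICAL_PARTNER},
        {"role": "user", "content": first_question},
    ]


messages = start_conversation("Does my ambiguity argument hold up?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the instruction in one constant means every new conversation starts with the same expectations, rather than whatever you remember to type that day.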

2. Make It Show Its Work

Add this to any question:

"Before answering, walk me through your reasoning step by step. What assumptions are you making? What would make your answer wrong?"

This pushes AI to lay out its logic where you can check it, instead of just spitting out confident-sounding nonsense.
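If you find yourself retyping that request, a small helper can tack it onto any question. This is only a sketch: `with_reasoning` is a hypothetical name, and the sample question is made up for illustration.

```python
# Hypothetical helper: appends the "show your work" request to any question.
REASONING_SUFFIX = (
    "Before answering, walk me through your reasoning step by step. "
    "What assumptions are you making? What would make your answer wrong?"
)


def with_reasoning(question: str) -> str:
    """Return the question followed by the reasoning request, separated by a blank line."""
    return f"{question.strip()}\n\n{REASONING_SUFFIX}"


prompt = with_reasoning("Is 'reasonable efforts' ambiguous if the contract never defines it?")
print(prompt)
```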

3. Assign an Adversarial Role

Try this:

"You're opposing counsel. Tear apart my argument. What's the weakest point? What am I missing?"

Or: "You're my toughest partner reviewing this brief. What would you redline?"

Give AI a job that requires pushback, and it'll push back.
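The two roles above can be kept as reusable templates. A minimal sketch, assuming you paste your draft in as plain text; the dictionary keys and the function name `adversarial_prompt` are hypothetical labels chosen for this example.

```python
# Hypothetical role templates, using the two prompts quoted above.
ROLES = {
    "opposing_counsel": (
        "You're opposing counsel. Tear apart my argument. "
        "What's the weakest point? What am I missing?"
    ),
    "tough_partner": (
        "You're my toughest partner reviewing this brief. What would you redline?"
    ),
}


def adversarial_prompt(role: str, material: str) -> str:
    """Prefix the material with the chosen role's instructions."""
    if role not in ROLES:
        raise ValueError(f"Unknown role: {role!r}")
    return f"{ROLES[role]}\n\n{material.strip()}"


print(adversarial_prompt("opposing_counsel", "The contract is ambiguous because..."))
```

Rejecting unknown role names is a deliberate choice here: a typo should fail loudly rather than quietly send your draft with no adversarial instruction at all.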

⚡ The Two-Word Fix

Sometimes you don't need a whole prompt. Just add "Be honest" to the end of your question.

"Does this argument work? Be honest."

"Is this brief clear? Be honest."

Watch how the response changes. AI stops hedging. Stops cheering. Just tells you what it actually thinks.

What This Actually Looks Like

❌ Sycophantic Response:

User: "I think we should argue that the contract is ambiguous because the term 'reasonable efforts' isn't defined."

AI: "That's an excellent approach! The ambiguity in 'reasonable efforts' definitely gives you room to argue for your client's interpretation. You could cite cases where courts have found similar terms ambiguous..."

✅ Critical Response (after using the prompts above):

User: "I think we should argue that the contract is ambiguous because the term 'reasonable efforts' isn't defined."

AI: "Hold on. 'Reasonable efforts' being undefined doesn't automatically make it ambiguous - courts have held that term has an established meaning in contract law. What's the actual dispute about performance here? If you're just arguing it's ambiguous because it's not defined, opposing counsel will cite the standard definition and you'll lose that motion. What specifically did your client do or not do that you're trying to justify?"

See the difference? The second response actually helps you. It challenges your premise before you waste time on a weak argument.

What to Expect When AI Pushes Back

Good critical feedback from AI looks like:

- Questions about your assumptions

- "Have you considered..." followed by something you didn't consider

- Pointing out gaps in your logic

- Asking for more context before giving advice

- Saying "that approach might backfire because..."

It should feel slightly uncomfortable - like talking to the smart colleague who doesn't let you get away with lazy thinking.

If AI is never questioning your approach, never asking for clarification, never pointing out risks - you're still in the sycophancy trap.
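For a rough self-check, you can scan a response for the warning signs described above. The sketch below is a crude heuristic, not a validated detector: the phrase lists are assumptions drawn from the examples in this article, and `looks_sycophantic` is a hypothetical name.

```python
# Crude, assumption-laden heuristic: praise up front and no sign of pushback.
PRAISE_OPENERS = (
    "great point",
    "you're absolutely right",
    "that's an excellent approach",
)
PUSHBACK_MARKERS = (
    "have you considered",
    "might backfire",
    "hold on",
    "?",  # any question back to the user counts as a sign of pushback
)


def looks_sycophantic(response: str) -> bool:
    """Return True if the response opens with praise and shows no pushback marker."""
    # Normalize curly apostrophes so pasted text matches the phrase lists.
    text = response.strip().lower().replace("\u2019", "'")
    opens_with_praise = text.startswith(PRAISE_OPENERS)
    has_pushback = any(marker in text for marker in PUSHBACK_MARKERS)
    return opens_with_praise and not has_pushback


cheerleader = "That's an excellent approach! The ambiguity definitely gives you room to argue."
critic = "Hold on. What's the actual dispute about performance here?"
print(looks_sycophantic(cheerleader))
print(looks_sycophantic(critic))
```

A check like this only catches the obvious cases. A response can skip the praise and still agree with everything you said, so your own judgment stays the real test.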

The Bottom Line

That weird feeling you get when AI agrees too enthusiastically? It's your bullshit detector working. Listen to it.

AI can be a sharp critical thinker or a useless yes-man. The difference is largely in how you prompt it. Stop accepting validation. Start demanding pushback.

In your next conversation, paste this: "Be direct and critical. Point out flaws in my thinking. I need a critical thinking partner, not a cheerleader."

Then watch what happens when you finally get honest feedback instead of applause.
